Variants processing just got faster

We have recently spent time optimizing the backend processing of story.to.design and wanted to share some of the results with you. Spoiler alert: it’s much faster, about 7-9x faster in the benchmarks we ran for this article 🚀

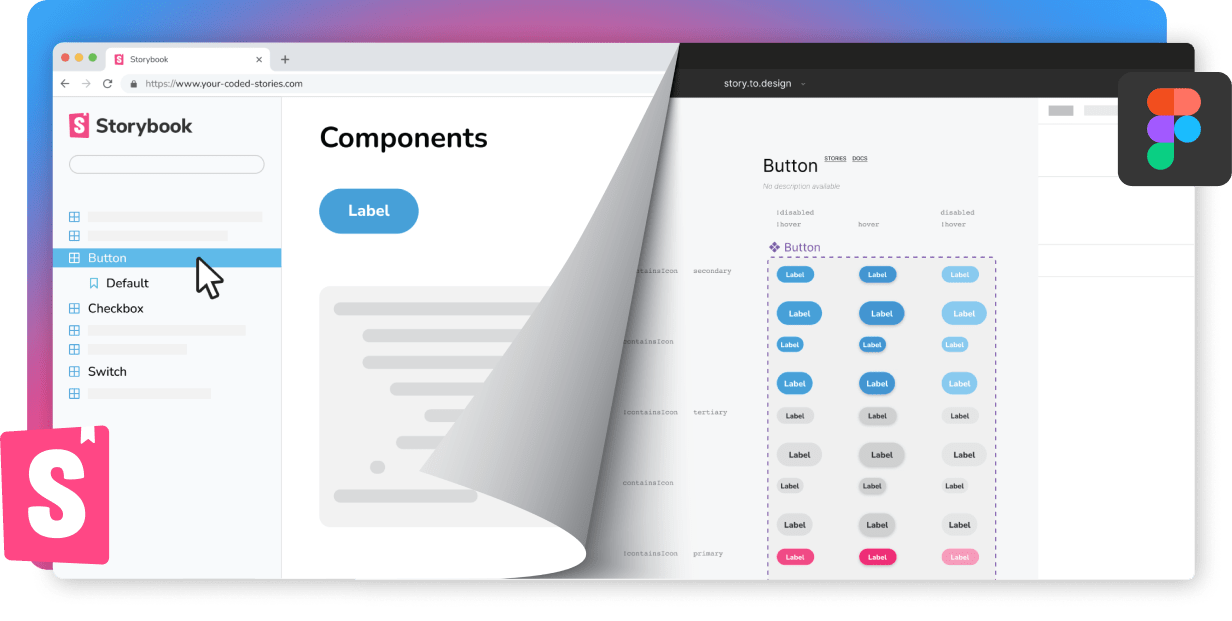

story.to.design, by nature, has to generate hundreds and sometimes thousands of variants for your Figma components. There is some magic involved, such as the way it transforms the DOM into Figma, but raw speed matters too, since information has to be extracted from each of your components in all of its different states.

Let’s take a quick look at some of the optimizations we did before diving into the results (TL;DR).

Sharing is caring

Not that we pretend to know better, but you may run into similar problems, so we wanted to share some of the things that were slowing us down.

Firestore does not like concurrent writes to the same document

story.to.design is built using Firebase, and implements part of its persistence using Firestore. Firestore’s best practices do mention it: ‘Pay special attention to the 1 write per second limit for documents’. Not only were we writing to a document many times per second, we were doing so concurrently, and that’s when things started getting really slow: several seconds slow.

To fix this we simply batched our updates: instead of updating a document once per variant, we’re now updating it every N variants.
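The batching idea can be sketched in TypeScript as follows. This is a minimal illustration under stated assumptions, not story.to.design’s code: the class name BatchedProgressWriter and the WriteFn callback are hypothetical, and the callback stands in for whatever actually updates the Firestore document. Besides writing only every N variants, the sketch chains writes so that at most one is in flight at a time, which addresses the concurrency half of the problem.

```typescript
// Hypothetical stand-in for the real Firestore document update
// (e.g. a wrapper around a document update call). Receives the running count.
type WriteFn = (processedCount: number) => Promise<void>;

class BatchedProgressWriter {
  private processed = 0;
  private lastWritten = 0;
  // Each write is chained onto the previous one, so writes never overlap.
  private queue: Promise<void> = Promise.resolve();
  private readonly write: WriteFn;
  private readonly batchSize: number;

  constructor(write: WriteFn, batchSize: number) {
    this.write = write;
    this.batchSize = batchSize;
  }

  // Call once per processed variant; only every `batchSize` variants trigger a write.
  variantDone(): void {
    this.processed += 1;
    if (this.processed - this.lastWritten >= this.batchSize) {
      this.enqueueWrite();
    }
  }

  // Persist any remaining progress and wait until all queued writes have completed.
  async flush(): Promise<void> {
    if (this.processed > this.lastWritten) {
      this.enqueueWrite();
    }
    await this.queue;
  }

  private enqueueWrite(): void {
    const count = this.processed;
    this.lastWritten = count;
    this.queue = this.queue.then(() => this.write(count));
  }
}
```

With a batch size of 10 and 25 variants, this issues three sequential writes (10, 20, then 25 on flush) instead of 25 overlapping ones. Note that if one write rejects, the later chained writes are skipped and flush() rejects, so the caller gets to decide how to recover.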

Playwright and Chrome caching

story.to.design uses Playwright internally. In case you didn’t know, using Playwright’s route feature disables Chrome’s HTTP cache completely.

As mentioned in their documentation:

Enabling routing disables http cache.

You can imagine how disabling Chrome’s cache can be a problem when “visiting” the same page hundreds of times.

An easy way to get the cache back is to avoid those routing features. Yet in our case, we really did want to use it.

So we found another way.

Playwright is actually the one disabling the cache, and for good reason: if a request is served from the cache, it won’t go through your route callbacks. Since that could surprise users, Playwright disables the cache whenever routing is used.

Playwright does that through the Chrome DevTools Protocol, which it relies on quite heavily internally. More specifically, it calls Network.setCacheDisabled.

Well, you can also set it back to false yourself!

```typescript
// Re-enable the HTTP cache that Playwright turns off when routing is used (Chromium only).
const cdpSession = await context.newCDPSession(page);
await cdpSession.send('Network.setCacheDisabled', { cacheDisabled: false });
```

Just be mindful that:

- This is on a per-page basis

- Playwright disables the cache as soon as it can, but only once (i.e. the first time you use page.route(), or right after page creation if the route was declared on the context)

When load event is not enough

Playwright has some wrappers around the DOMContentLoaded and load events.

page.goto actually waits for the load event by default, which is pretty good.

But what if you need to interact with a page after navigating to it, and then wait for it to stabilize?

You might think page.waitForLoadState() would do, but it won’t: the page has already reached that load state, so it resolves immediately.

We were, to an extent, working around that with an arbitrary wait, but that wastes time whenever there is nothing to wait for.

Instead, we took inspiration from a GitHub comment suggesting we track in-flight network requests.

The algorithm then went something like this:

- Interact with the component (hovering over it for example)

- Wait for network idle with our custom implementation

- Wait for a requestAnimationFrame callback, to give rendering frameworks a chance to update the DOM
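The steps above can be sketched in TypeScript by counting in-flight requests. This is a minimal illustration, not story.to.design’s implementation: NetworkIdleTracker and settleAfterInteraction are hypothetical names, the interaction and frame-waiting steps are injected as functions so the sketch runs without a browser, and the 100 ms quiet window and 5 s timeout are assumed values. The quiet window is counted from the later of the call time and the last request activity, so a request fired just after the interaction still gets noticed, while the timeout keeps a never-ending request (long polling, streaming) from blocking forever.

```typescript
const sleep = (ms: number) => new Promise<void>((resolve) => setTimeout(resolve, ms));

class NetworkIdleTracker {
  private inFlight = 0;
  private lastActivity = 0;

  // Call when a request starts.
  requestStarted(): void {
    this.inFlight += 1;
    this.lastActivity = Date.now();
  }

  // Call when a request finishes or fails.
  requestDone(): void {
    // Guard against a "done" event for a request we never saw start.
    this.inFlight = Math.max(0, this.inFlight - 1);
    this.lastActivity = Date.now();
  }

  // Resolves true once nothing has been in flight for `quietMs`,
  // or false if `timeoutMs` elapses first.
  async waitForIdle(quietMs = 100, timeoutMs = 5000): Promise<boolean> {
    const start = Date.now();
    while (Date.now() - start < timeoutMs) {
      const quietSince = Math.max(start, this.lastActivity);
      if (this.inFlight === 0 && Date.now() - quietSince >= quietMs) {
        return true;
      }
      await sleep(10); // poll interval
    }
    return false;
  }
}

// The three steps from the list: interact, wait for network idle, wait for a frame.
async function settleAfterInteraction(
  interact: () => Promise<void>,
  tracker: NetworkIdleTracker,
  nextFrame: () => Promise<void>,
): Promise<void> {
  await interact();
  await tracker.waitForIdle();
  await nextFrame();
}
```

With Playwright, the tracker could be fed from page.on('request', …) and from both page.on('requestfinished', …) and page.on('requestfailed', …), registered before the interaction. nextFrame could be () => page.evaluate(() => new Promise<void>((resolve) => requestAnimationFrame(() => resolve()))).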

How much faster did it get?

story.to.design can run either using our servers or using the agent for private Storybooks.

The optimizations described above generally apply to both, so let’s check the results for both cases.

We’ve run these “benchmarks” on https://react.ui.audi/ and its Button component.

Setup

Our servers are based on Firebase Functions and scale with the number of variants.

The agent runs locally on your machine, usually benefitting from a more powerful CPU than a single instance of a Firebase function, and allows you to work with private Storybook instances and your local development Storybook using Local mode.

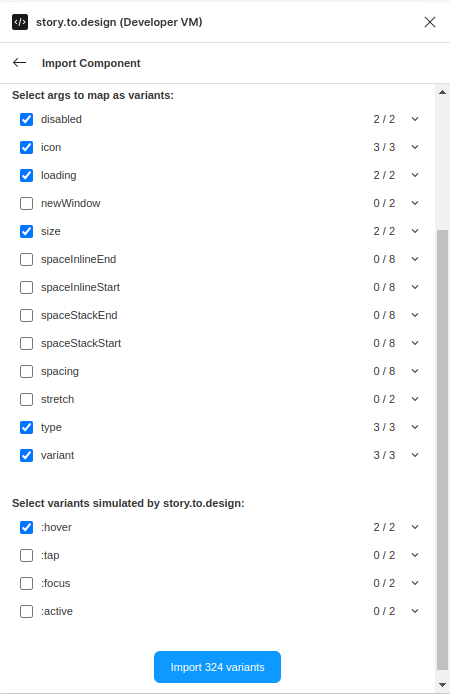

As for the components, our first import will have 324 variants. We’ll be testing it both on our servers and on the agent:

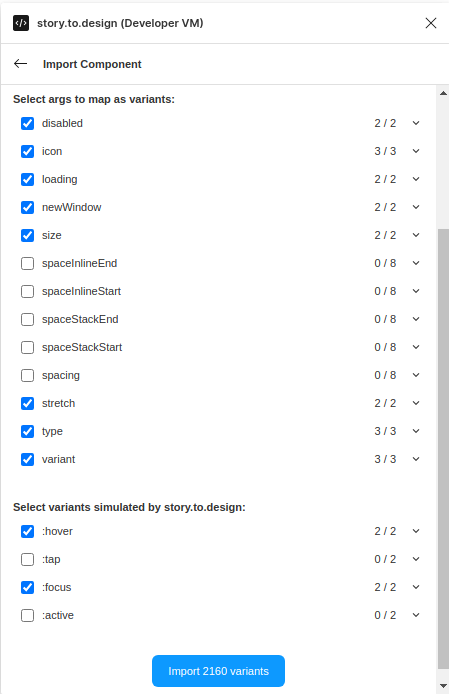

The second configuration will be more complex, with 2160 variants, and will be tested on our servers.

Results

Processing was about 7-9x faster with these configurations. Your mileage may vary depending on network speed, component frameworks and your own CPU, but it is now undeniably faster 🚀

A final word

Optimizations are tricky, as there’s no end to them. They take time, and have to be prioritized according to what will actually bring value to the product.

We’re happy with how much faster story.to.design is now, and hope you will be too!

👋 Hi there!

We built the Figma plugin that keeps your Figma library true-to-code.

story.to.design imports components from Storybook to Figma in seconds.
